By Aubrey Blanche

You have probably experienced some version of this moment.

You contact a candidate to let them know they are not moving forward. You have your notes ready. You know what feedback you can share. You are prepared for the usual reactions.

But this time the candidate pauses and asks:

“Can you tell me more about how this decision was made? And were any AI tools involved in the process?”

It is a fair question. Candidates understand that AI is used in hiring. They want to know whether technology shaped their chances, influenced their assessment, or contributed to the final decision.

In that moment, the conversation shifts. You are not simply delivering feedback. You are being asked to explain a system. You are being asked to stand behind it.

And to do that, you need to understand not only whether AI was involved, but how it contributed and why a human made the final call.

This is where the real challenge appears.

To navigate this challenge, we partnered with AI and organizational ethics expert Aubrey Blanche. In her work on responsible AI in hiring, she is clear on one core principle: “If you cannot explain how an AI tool contributes to a hiring decision, you cannot defend the decision that follows.”

Why explainability matters

AI tools are now part of everyday hiring. They can help manage high volumes of applications and reveal relevant information quickly. They can make processes more efficient and more consistent if built and deployed correctly.

However, efficiency comes with a new kind of risk. If you do not understand how an AI tool reaches a recommendation, you lose the ability to justify the decision it helped shape, even if the decision itself was never automated.

Aubrey frames explainability in practical terms: “Explainability means being able to understand and communicate how an AI tool reached a decision.”

This includes:

- Knowing what information the tool uses

- Understanding how the tool interprets that information

- Being able to summarize its influence in simple terms

- Recognizing where your human judgment fits in

You do not need technical knowledge. You only need clarity. Regulators expect this. Candidates do too.

If an AI system ranked a candidate lower, you should be able to explain the factors that influenced that ranking. If someone asks whether AI played a role in their rejection, you should know exactly what to say.

Without explainability, trust breaks down. And trust is at the heart of every fair hiring process.

Why accountability matters

Explainability is about understanding. Accountability is about ownership.

As Aubrey notes, “an algorithm does not take responsibility for its decisions, you and your organization do.”

Accountability means:

- AI can support decisions but cannot replace human judgment

- Humans must always review and interpret outputs

- The organization is responsible for outcomes involving AI

- Vendors often do not absorb the legal or ethical risk for you

A human in the loop is not a box to tick. It is the safeguard that protects fairness and prevents decisions that cannot be defended.

If you cannot explain an AI output and cannot stand behind it, your organization is exposed: not technically, but ethically and legally.

How to put explainability and accountability into practice

The goal is not to become a technical expert. The goal is to stay in control of your process. These practical habits make that possible.

1. Choose tools you can explain

Ask vendors clear questions:

- How does the AI reach its decisions?

- What data does it rely on?

- What patterns does it look for?

- How do humans remain part of the decision?

Aubrey is direct on this point: “If vendors cannot provide clear answers, think twice before using the tool.”

2. Keep humans involved at every stage

AI can suggest, rank, and highlight. But it should never decide on its own. Humans should always:

- Review the recommendation

- Add context

- Make the final decision

3. Document how AI contributed

You do not need long reports. A short note is enough:

- How the tool was used

- What insight it provided

- Whether you agreed with it

- The reasoning behind your final decision

Documentation protects you, your candidates, and your company.
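If your team keeps these notes in a spreadsheet or internal tool, the four points above map onto a small structured record. The following is only a minimal sketch in Python, not a prescribed format: the class name `AIContributionNote`, its field names, and the sample values are all hypothetical and chosen purely for illustration.

```python
from dataclasses import dataclass, asdict
from datetime import date
import json


@dataclass(frozen=True)
class AIContributionNote:
    """Hypothetical short note on how an AI tool contributed to one decision."""
    candidate_ref: str       # internal reference, not personal data
    how_tool_was_used: str   # how the tool was used
    insight_provided: str    # what insight it provided
    reviewer_agreed: bool    # whether the human reviewer agreed with it
    final_reasoning: str     # the reasoning behind the final human decision
    reviewer: str            # who made the final call
    decided_on: date

    def __post_init__(self) -> None:
        # A note without human reasoning defeats its purpose, so reject it.
        if not self.final_reasoning.strip():
            raise ValueError("final_reasoning must not be empty")

    def to_json(self) -> str:
        record = asdict(self)
        record["decided_on"] = self.decided_on.isoformat()
        return json.dumps(record, indent=2)


# Illustrative values only; none of this comes from a real process.
note = AIContributionNote(
    candidate_ref="CAND-001",
    how_tool_was_used="Ranked applications against the posted requirements",
    insight_provided="Ranked the candidate low on required certifications",
    reviewer_agreed=False,
    final_reasoning="Equivalent experience was confirmed in the interview",
    reviewer="recruiter@example.com",
    decided_on=date(2025, 1, 15),
)
print(note.to_json())
```

The one design choice worth keeping, whatever format you use, is that the note cannot be saved without the human reasoning behind the final decision.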

4. Practice transparent communication

Candidates want clarity. A simple statement can build trust:

“We use an AI tool to help organize applications, but every decision is reviewed by a recruiter.”

This shows fairness and reinforces that humans remain in charge. Ideally, you will also have a shareable policy or guide that outlines not only how you use AI, but also how candidates are allowed to use it.

5. Align your hiring team

Accountability falls apart if recruiters, hiring managers, and interviewers give different descriptions of the process.

Take time to ensure your entire team understands:

- What your AI tool actually does

- How you describe it to candidates

- What level of human oversight exists

- What language is accurate and consistent

Consistency signals competence. Mixed messages create risk.

Expert Aubrey Blanche points out: “It’s important to make sure that your entire team is speaking the same language.”

The key takeaway

Explainability and accountability are the foundation of responsible AI use in hiring. They help you protect candidates, maintain fairness, and build trust.

If you cannot explain a decision, you should not let AI influence it.

By choosing tools you understand, keeping humans in control, documenting your reasoning, and communicating clearly, you create a hiring experience that is both effective and trustworthy.

This is how you balance compliance with innovation. Not by choosing one over the other, but by ensuring both support a hiring process that remains human at its core.

Ready to build real confidence with AI in your hiring process?

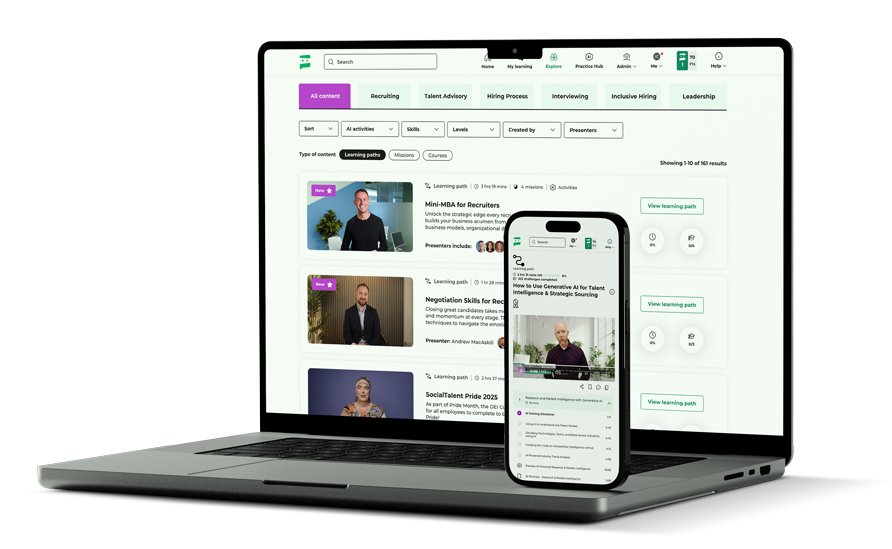

This article explores ideas from Hiring With Integrity: AI and Data Privacy, a learning path featuring AI and organizational ethics expert Aubrey Blanche on the SocialTalent platform. Check out the full learning path to explore these ideas in more depth and see how they apply across real hiring decisions.