By Johnny Campbell

I was at Talent Connect in San Diego a few weeks back, sitting in a conversation with several TA leaders who were venting about candidate cheating: everything from AI-generated resumes and highly coached interview responses to technical assessments completed with ChatGPT’s help.

The frustration was real and understandable, but after listening for a while, I asked a question that I didn’t realize was controversial: “Do you have an explicit policy about what candidates can and can’t do with AI?”

Silence.

“Then you can’t call it cheating if you haven’t defined the rules.”

Everyone’s Using AI, Nobody’s Talking About It

Here are the actual numbers that should concern you:

Nearly two-thirds (63%) of job seekers are using AI at some point in their job search (CNBC). And that number is only going up.

43% of organizations used AI for HR tasks in 2025, up from 26% in 2024 (SHRM).

Let that sink in. Candidates are using AI at higher rates than companies are (63% versus 43%), and most organizations haven’t even acknowledged this reality, let alone set expectations around it.

The current approach (a sort of “don’t ask, don’t tell” policy) is untenable. It creates several problems:

For candidates: They don’t know what’s acceptable. Is using ChatGPT to polish their resume okay? What about practicing interview questions in an AI mock interview? Getting real-time coaching during a video interview? They’re left guessing where the line is.

For hiring teams: You’re operating blind. You suspect candidates are using AI but can’t address it directly. You’re making hiring decisions without knowing how much of what you’re seeing is authentic capability versus AI assistance.

For your organization: You’re exposed to legal risk, fairness issues, and inconsistent evaluation standards across different hiring managers and teams.

The solution isn’t to ban AI or pretend it doesn’t exist. It’s to be explicit about what’s acceptable and what’s not, for both candidates and your own teams.

What Leading Companies Are Doing Differently

While most organizations are still figuring out their stance, a handful of forward-thinking companies have published explicit AI policies for candidates. Not buried in legal T’s and C’s, but right on their careers pages.

Let me show you what they’re getting right:

Thoughtworks: Encouraging Responsible Use

Thoughtworks takes a refreshingly positive approach. Their policy explicitly encourages candidates to use AI responsibly for preparing for interviews (research, practice questions, mock interviews), crafting and organizing resumes to highlight relevant skills, and supporting accessibility needs that level the playing field.

But they’re clear about where the line is: No AI-generated work submitted as your own in assessments, no real-time AI assistance during live interviews, no fabrication of experiences or credentials.

What I love: They explain how they use AI too (for administrative tasks, drafting messages, improving job descriptions) but are explicit that “all hiring decisions at Thoughtworks are made by people. AI does not screen, rank or reject candidates.”

Accenture: Clear Permitted vs. Prohibited

Accenture’s policy is structured around explicit do’s and don’ts:

Permitted: using AI to prepare and present your best self; research and interview preparation; resume structure and clarity.

Prohibited: generating false or misleading information; completing assessments with AI (unless explicitly stated otherwise); real-time AI assistance during interviews; voice cloning or deepfake tools.

They back this up with transparency about their own use: “We use AI to enhance – not replace – human decision-making” and outline their Responsible AI principles.

Rapid7: Detailed Role-Based Guidance

Rapid7 goes further with specific responsibilities and expectations for different stakeholders:

For candidates, they break down acceptable use by scenario:

- Interview prep: Yes

- Accessibility accommodations: Case-by-case

- Coding assistants: Allowed, but you must be able to explain the code, identify bugs, and discuss improvements

- Live interview assistance: No

- Fabricating credentials: Absolutely not

For their TA team, they’re equally explicit about their own AI use and limitations, including what they won’t do (automated decisions, unreviewed outreach, scoring without human review).

SAP, Ericsson, and GoDaddy: Transparency Both Ways

These companies all emphasize mutual transparency: We’ll tell you when and how we’re using AI, and we expect you to use it responsibly.

SAP explicitly states they use AI for CV parsing and matching, but “all application decisions are and will always be made by qualified human recruiters and hiring teams.”

Ericsson treats AI like “a grammar or spell-checking tool” – fine for refinement, not for creation.

GoDaddy provides stage-by-stage guidance, from application through interviews, with clear expectations at each step.

This Matters More Than You Think

Publishing an explicit AI policy isn’t just about preventing “cheating.” It serves multiple strategic purposes:

1. Candidate Experience: Transparency builds trust. When candidates know what’s expected and what’s acceptable, they can prepare appropriately without anxiety or confusion. Ambiguity creates a terrible experience.

2. Fairness and Consistency: Without clear guidelines, different hiring managers will have different tolerances and different detection methods. One manager might accept AI-polished applications while another automatically rejects them. That’s not fair to candidates or defensible for you.

3. Legal Protection: As AI use in hiring becomes more regulated (EU AI Act and California’s SB 53), having documented policies about acceptable use (on both sides) becomes critical for compliance.

4. Quality of Hire: When you’re clear about what you’re trying to assess and how candidates can prepare, you actually get better signal. You’re evaluating the right things, not penalizing people for using tools responsibly.

How to Develop Your Own AI Acceptable Use Policy

If you’re reading this thinking “we need one of these,” here’s how to actually make it happen:

Step 1: Assemble the Right Team

This isn’t just a TA project. You need:

- TA leadership (owns the policy)

- Legal/Compliance (ensures it’s defensible and compliant)

- InfoSec/IT (addresses technical risks and detection)

- Hiring managers (must buy in and enforce it)

- DEI/Accessibility (ensures accommodations are considered)

Step 2: Define Your Principles First

Before you write specific rules, agree on your philosophical stance:

- Are you encouraging responsible AI use or merely tolerating it?

- What are you actually trying to assess in your hiring process?

- How do you balance efficiency with authenticity?

- What’s your stance on accessibility and accommodation?

These principles will guide every specific decision you make.

Step 3: Map Acceptable vs. Prohibited Use

Break this down by stage of the hiring process:

Application Stage:

- Resume/CV structure and polish: Acceptable or not?

- Cover letter drafting: Where’s the line?

- Research about your company: Encouraged?

- Fabricating experience: Obviously prohibited, but how will you detect it?

Assessment Stage:

- Take-home assignments: Can they use AI as a tool? Must they disclose?

- Technical challenges: Coding assistants allowed? Under what conditions?

- Writing samples: How do you ensure authenticity?

Interview Stage:

- Preparation and practice: Encouraged?

- Real-time assistance: How do you define and detect this?

- Accessibility accommodations: What’s reasonable?

For each stage, also document how you’re using AI so there’s transparency both ways.

Step 4: Get Hiring Manager and Interviewer Buy-In

This is where most policies fail. Your hiring managers need to:

- Understand the policy and the reasoning behind it

- Know how to communicate it to candidates

- Feel comfortable assessing whether candidates are following it

- Have clear escalation paths for concerns

Run training sessions. Provide talking points. Make it easy for them to have these conversations.

Step 5: Make Your Policy Public and Accessible

Don’t bury this in legal terms. Put it on your careers page. Include it in application confirmations. Reference it in interview scheduling emails. Look at how Thoughtworks, Accenture, SAP, Ericsson, Rapid7, and GoDaddy have done this – simple, clear language on dedicated pages that candidates can find and reference.

Step 6: Build in Review and Revision

This is critical: Your policy must be iterative. AI capabilities are evolving monthly. Candidate behavior is changing. Regulations are being written. Your policy from today will be outdated in six months.

Set a clear revision schedule:

- Quarterly reviews: Are we seeing new AI use cases we didn’t anticipate?

- Semi-annual updates: Do we need to adjust permitted/prohibited categories?

- Annual comprehensive revision: Is our fundamental approach still right?

Assign someone to own this. Track feedback from hiring managers and candidates. Monitor industry developments and regulatory changes.

Companies like Rapid7 explicitly acknowledge this in their policies: “As AI usage continues to evolve, we want to be clear to our candidates, interviewers, hiring managers, and our Global TA team around both acceptable and prohibited use.”

The Templates Are Out There: Use Them

You don’t have to start from scratch. The companies I’ve mentioned have made their policies public:

- Thoughtworks: Great for a principles-based, encouraging approach

- Accenture: Clear permitted vs. prohibited structure

- Rapid7: Detailed, role-specific guidance

- SAP, Ericsson, GoDaddy: Strong transparency both ways

Study these. Borrow structure. Adapt to your context. But don’t wait for perfect – get version 1.0 out and iterate from there.

What Happens If You Don’t Do This?

Let me be blunt about the risks of inaction:

You’re already making hiring decisions based on AI-assisted applications and you don’t know it. More than half of your recent hires likely used AI somewhere in the process. Are you okay with that? Do you even know how it affected your evaluations?

Your hiring managers are operating with different standards. Some are probably more tolerant of AI use; others are suspicious of everything. Without a unified policy, you’re creating an inconsistent, potentially discriminatory process.

You have no defense when something goes wrong. Whether it’s a candidate who completely fabricated their credentials using AI, or a discrimination claim based on how different candidates were treated, you have no documented standards to point to.

You’re losing great candidates who are confused about expectations. The best candidates – the ones with options – don’t want to play guessing games about what’s acceptable. Ambiguity drives them to competitors who are clearer.

We’re past the point where you can pretend AI isn’t part of your hiring process. Candidates are using it. You’re probably using it. The question isn’t whether to address it – it’s whether you’ll do it proactively or reactively.

The companies getting this right aren’t the ones trying to ban AI or catch people “cheating.” They’re the ones being explicit about expectations, transparent about their own use, and creating fair, consistent standards that apply to everyone.

You can’t call it cheating if you haven’t defined the rules. So define them.

Build your policy. Get alignment. Make it public. Review it regularly. Enforce it consistently.

And most importantly: stop treating AI use as a moral failure and start treating it as a reality that requires thoughtful policy and clear communication!

Does your organization have an AI acceptable use policy? What challenges are you facing in creating one? Connect with me on LinkedIn and let me know!

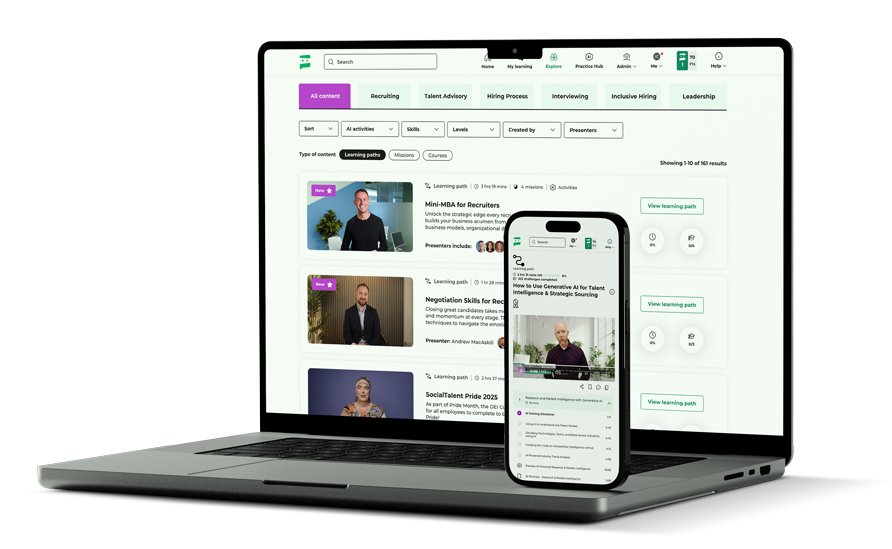

Want more content like this? Check out SocialTalent.com today!